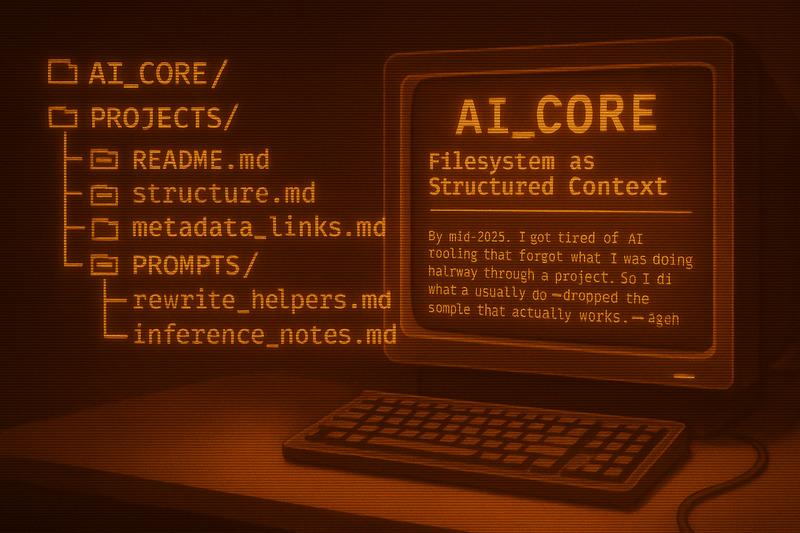

AI_CORE: Filesystem as Structured Context

By mid-2025, I got tired of AI tooling that forgot what I was doing halfway through a project. So I did what I usually do—dropped the bloat, ignored the trend, and built something simple that actually works.

AI_CORE is a local-file-based context system that uses folder names, Markdown, and some light TOML to keep my thoughts—and the AI’s—connected and grounded.

🧠 Summary

No vector databases. No plugins. No 3rd-party APIs.

Just folders, filenames, README.md, and frontmatter that link everything together.

The folder structure is the context boundary.

The files are the memory.

Everything sits where it belongs—where you can see it, edit it, track it, and run inference directly against it.

❌ Pain Points This Solves

I built this because I got sick of:

- AI editors that make dumb mistakes (deleting the function above, duplicating the header, losing track of the job)

- LLMs forgetting what they just did 3 prompts ago

- RAG systems bringing in totally irrelevant noise because a chunk happened to have the same keyword

Most AI tools feel great until you try to work on something bigger than a paragraph. Then everything breaks.

🧪 Previous Attempts

Tried a few things before this:

- Monolithic README.md files with inline notes and links: gets messy fast, hard to chunk.

- RAG over Markdown: hit-and-miss, noisy, adds complexity.

- SQLite: fast and structured, but not something I want to hand-edit or commit.

All of them required extra glue. None of them felt like working.

💡 What AI_CORE Does

So I went the other direction. No dependencies. No embeddings. Just… structure.

- Each directory = contextual scope

- Each Markdown file = thought or memory chunk

- Frontmatter (TOML) = metadata and link hooks

- File/folder names = semantic hints the LLM can track

- Tools/scripts = generated in place by the AI itself

The LLM sees the structure, reads the files, follows the references, and writes in context.

Example layout:

```
AI_CORE/
├── PROJECTS/
│   └── ai_filesystem_context/
│       ├── README.md
│       ├── structure.md
│       ├── metadata_links.md
│       └── prompts/
│           ├── rewrite_helpers.md
│           └── inference_notes.md
```
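For the model to see the structure, the tree itself has to end up in the prompt. Below is a minimal sketch that renders a folder as a tree-style listing like the example layout. The function name render_tree is hypothetical. The ordering (files before subfolders, each group sorted by name) is an arbitrary assumption, so entries come out in a slightly different order than the hand-written layout.

```python
from pathlib import Path


def render_tree(root: Path) -> str:
    """Render `root` as a tree-style listing: files first, then subfolders,
    each group sorted by name (an arbitrary ordering choice)."""
    lines = [root.name + "/"]

    def walk(directory: Path, prefix: str) -> None:
        # key (is_dir, name): False sorts before True, so files come first
        entries = sorted(directory.iterdir(), key=lambda p: (p.is_dir(), p.name))
        for i, entry in enumerate(entries):
            is_last = i == len(entries) - 1
            lines.append(prefix + ("└── " if is_last else "├── ") + entry.name
                         + ("/" if entry.is_dir() else ""))
            if entry.is_dir():
                # children of the last entry get blank indentation; others keep the "│" rail
                walk(entry, prefix + ("    " if is_last else "│   "))

    walk(root, "")
    return "\n".join(lines)
```

Paste the output at the top of a prompt and the folder names start doing their job as semantic hints before the model opens a single file.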

⚙️ Effectiveness (So Far)

How well it works really depends on the LLM:

- ChatGPT-4o: handles file references, TOML, and scoped reasoning well. It “feels” like it gets it.

- Claude 3.5/3.7 Sonnet: great for structure + narrative reasoning. A little verbose, but capable.

Anything smaller? Kind of a mess. Local models don’t do so hot unless heavily guided—and even then, they’ll miss references or ignore the structure completely.

💬 Observations

- It’s way easier to build with this than talk to an AI with amnesia.

- I can version control it, diff it, grep it, and the AI can too.

- The AI can write tools inside it, then call them later.

It’s not pretty. It’s not sexy. But it scales with me, not against me.

🧱 Why Not Use a Database?

Because I don’t want to explain my notes to a database. I want to see them. Edit them. Let the AI read them as they are. No translation layer. No middleware. Just thought -> file -> model.

Plus, it makes it dead simple to:

- Generate code that lives next to the prompt that made it

- Modify itself (the AI writes new .md files with frontmatter)

- Store toolchains inline with reasoning logs

⚠️ Limits

- Requires discipline in file naming and folder hierarchy

- Context window still caps you (but at least it’s better structured)

- Doesn’t magically fix dumb LLM behavior—but at least you know where it went wrong

- Still manual-ish without a smart CLI wrapper (which I’m building)

🧓 Final Thoughts

AI_CORE is my brain’s garage. Sometimes it’s messy. But every tool has a place. Every wire has a label. And the AI doesn’t get to guess what I mean anymore—it has to read the signs.

If you’re tired of forgetting what you were working on… If you want your tools to respect your structure instead of ignoring it… Try a file tree.

Turns out, the best memory system was the local filesystem all along.